Third-Party GPT-4o Apps: The Truth & Why to Avoid Them in 2025

This detailed comparison between Third-Party GPT-4o Apps and ChatGPT is a part of our AI Tools Comparison Series, which explores the best tools shaping the AI landscape.

Introduction – Be Careful with Third-Party GPT-4o Apps

Official vs Third-Party GPT-4o Apps

Facts about real and third-party artificial intelligence applications in 2025.

GPT-4o is one of the most powerful artificial intelligence models available today, and OpenAI provides access to it through its official ChatGPT platform.

However, many third-party applications claim to offer “weekly”, “monthly”, or “ad hoc” subscriptions, or even “lifetime” or “one-time purchase” access to GPT-4o at incredibly low prices.

In addition, some of these third-party apps ask for payment up front with no trial period, so you cannot try the service before you buy.

Why pay for a service whose basic tier is free to anyone (OpenAI’s basic ChatGPT service costs nothing), when the third party simply builds its product on that same OpenAI technology and resells it?

But is it too good to be true? Yes – and here’s why.

How These Apps Access GPT-4o

These third-party apps do not own or develop GPT-4o.

Instead, they use OpenAI’s API, which allows developers to integrate GPT-4o into their apps.

OpenAI bills API usage by the amount of text processed (measured in tokens), so the app developer pays each time you ask the AI something.
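To make the billing model concrete, here is a minimal Python sketch of how a developer's cost for a single request could be estimated. The prices in `EXAMPLE_PRICES` are placeholder assumptions, not quotes from OpenAI, and the `TokenPrices` class and `request_cost` function are hypothetical names used only for illustration. Check OpenAI's pricing page for current figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrices:
    """Hypothetical per-million-token prices in USD (placeholders only)."""
    input_per_million: float
    output_per_million: float


def request_cost(prices: TokenPrices, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD of one API request."""
    total = (
        input_tokens * prices.input_per_million
        + output_tokens * prices.output_per_million
    )
    return total / 1_000_000


# Assumed example prices; real prices change over time.
EXAMPLE_PRICES = TokenPrices(input_per_million=2.50, output_per_million=10.00)

if __name__ == "__main__":
    cost = request_cost(EXAMPLE_PRICES, input_tokens=500, output_tokens=700)
    print(f"Estimated cost of one request: ${cost:.5f}")
```

A single request costs only a fraction of a cent under these assumptions, but the cost recurs with every message a user sends.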

The Financial Reality: Why a One-Time Fee Makes No Sense

OpenAI’s API is a pay-as-you-go service. If an app offers unlimited GPT-4o for a one-time payment of, say, $50, each user’s ongoing usage will eventually cost the developer more than that user ever paid.
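The arithmetic behind that claim can be sketched in a few lines of Python. Everything numeric here is an assumption chosen for illustration: the $0.01 cost per request, the 40 requests per day, and the function name `months_until_fee_is_spent` are all hypothetical.

```python
def months_until_fee_is_spent(
    one_time_fee: float,
    requests_per_day: int,
    cost_per_request: float,
    days_per_month: int = 30,
) -> float:
    """Return how many months of usage a one-time fee covers.

    Only API usage is counted, so every other expense is ignored.
    """
    if requests_per_day <= 0 or cost_per_request <= 0:
        raise ValueError("usage and cost must be positive")
    monthly_api_cost = requests_per_day * cost_per_request * days_per_month
    return one_time_fee / monthly_api_cost


if __name__ == "__main__":
    # Assumed figures: $50 fee, 40 requests a day, $0.01 per request.
    months = months_until_fee_is_spent(50.0, 40, 0.01)
    print(f"The fee covers about {months:.1f} months of API usage")
```

Under these assumed figures the fee covers roughly four months of API usage. The sketch ignores app store commissions, servers, and staff, so a real app would likely reach that point sooner.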

To stay afloat, they typically resort to one or more of the following:

- Limit usage (e.g., daily message caps or slow response times)

- Use older or restricted AI models instead of true GPT-4o

- Sell user data or push aggressive ads to compensate for costs

- Shut down unexpectedly once they can no longer sustain the service
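The first tactic in the list above, a daily message cap, is simple to build. The following Python sketch shows one way it could work. It is an illustration under stated assumptions, not the code of any particular app, and the `DailyMessageCap` class and its `allow` method are hypothetical names.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Optional, Tuple


class DailyMessageCap:
    """Allow each user at most `max_per_day` messages per calendar day."""

    def __init__(self, max_per_day: int) -> None:
        self.max_per_day = max_per_day
        # Maps (user_id, day) to the number of messages already allowed.
        self._counts: Dict[Tuple[str, date], int] = defaultdict(int)

    def allow(self, user_id: str, today: Optional[date] = None) -> bool:
        """Return True and count the message if the user is under the cap."""
        key = (user_id, today or date.today())
        if self._counts[key] >= self.max_per_day:
            return False
        self._counts[key] += 1
        return True


if __name__ == "__main__":
    cap = DailyMessageCap(max_per_day=2)
    for attempt in range(1, 4):
        print(f"Message {attempt} allowed: {cap.allow('user-123')}")
```

With a cap like this in place, "unlimited" access stops partway through the day, which is how an app can keep its API bill below what users paid.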

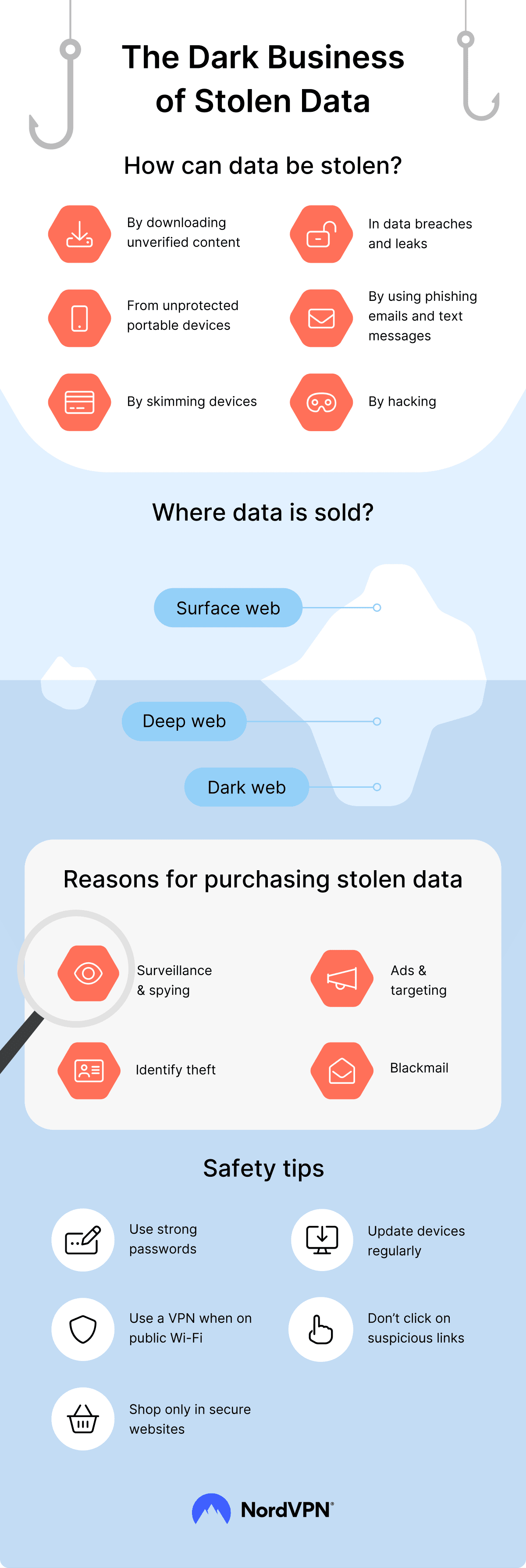

The Risks of Using Third-Party GPT-4o Apps

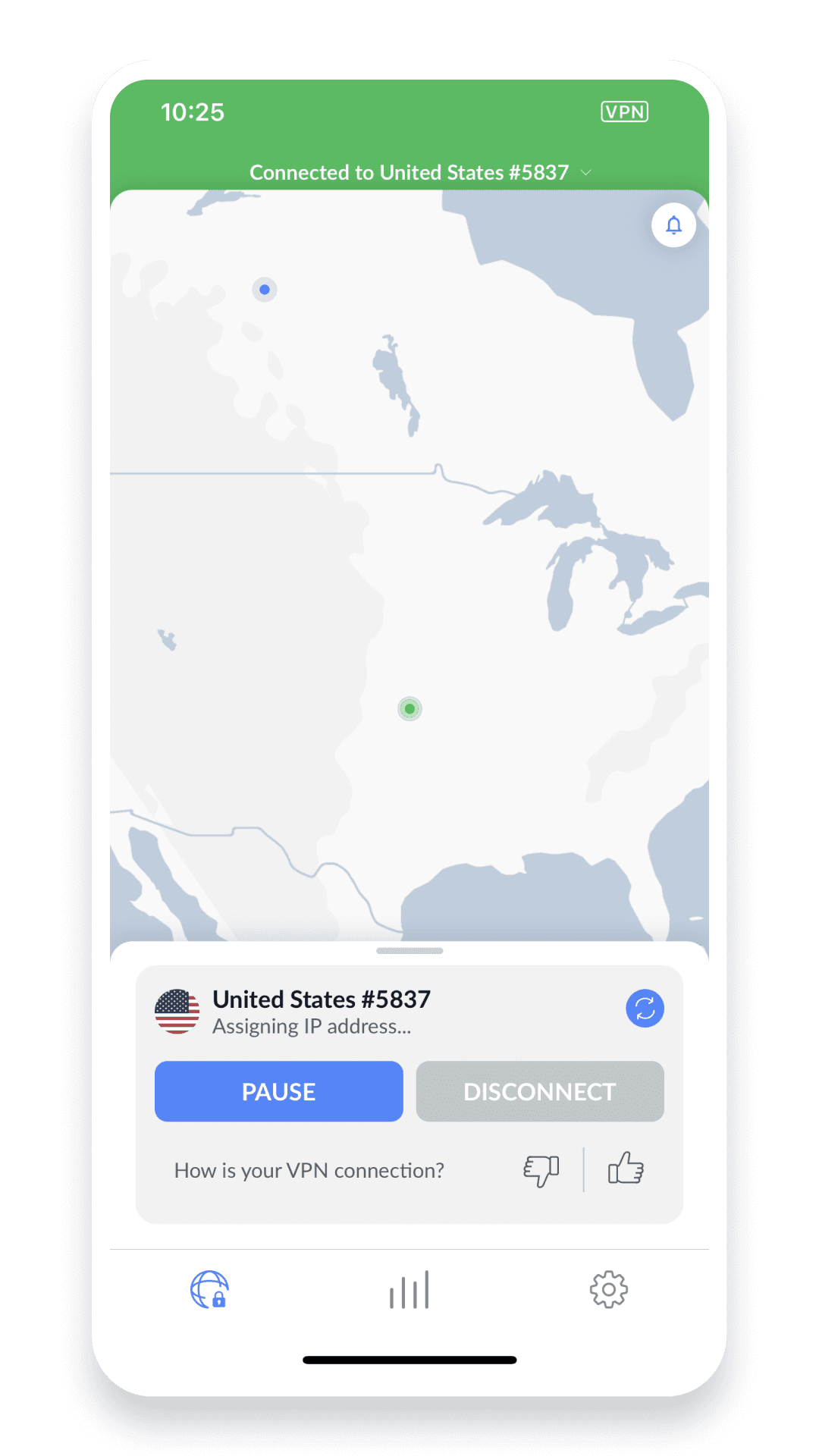

1. Data Privacy Concerns

When you use an unofficial AI app, you don’t know how your data is stored, used, or shared. OpenAI publishes its privacy and security policies, while many third-party apps have no clear privacy policy at all, so your data might be stored, misused, or even sold without your consent.

📌 Beyond AI apps, cybersecurity risks are growing in AI-based workflows. Learn more in our post on Cybersecurity in AI-Based Workflows.

2. Lack of Customer Support

Since these apps are unofficial, they rarely offer proper support. If something goes wrong, you have no guarantee of help.

In contrast, OpenAI provides official support channels for ChatGPT users.

3. Poor AI Performance

Some apps throttle performance to cut costs, meaning you may experience slow or incomplete responses. You might also unknowingly be using an outdated AI model instead of GPT-4o.

4. Ethical Concerns & Misleading Marketing

Many of these apps advertise “lifetime GPT-4o access” when, in reality, they rely on an unsustainable API-based pricing model.

They often mislead users with exaggerated claims.

📌 These AI services raise serious ethical concerns. Should AI be used to mislead consumers? Read our deep dive on AI Ethics in Surveillance and Privacy.

5. Misinformation & AI-Generated Content

Some of these third-party apps even fabricate AI-generated reviews or misleading content to attract users.

This further contributes to the spread of AI-powered misinformation.

📌 With AI-generated content rising, misinformation is becoming a growing concern. Learn more in our post on The Rise of AI Generated Content.

Comparing OpenAI’s ChatGPT vs. Third-Party Apps

| Feature | OpenAI ChatGPT-4o (Official) | Third-Party GPT-4o Apps |
|---|---|---|
| Access | Free (with limits) or Plus ($20/month) | One-time fee or vague pricing |
| API Costs | No extra cost to users | Developers pay OpenAI for every request |
| Reliability | Kept up to date by OpenAI; limits depend on plan | May slow down or stop working |
| Data Privacy | OpenAI’s security policies | Unknown; data could be misused |
| Support & Updates | Direct from OpenAI | No guarantees or support |

❓ FAQs About Third-Party GPT-4o Apps

How do third-party apps access GPT-4o?

They integrate OpenAI’s GPT-4o via the official API on a pay-as-you-go basis, then recover those costs through usage limits, message caps, or ads.

Are third-party GPT-4o apps legal?

Yes, using the API is legal, but many apps market capabilities or pricing in misleading ways and lack transparency.

Why is OpenAI’s ChatGPT a better choice?

It’s reliable, secure, updated frequently, and backed by clear policies, official support, and predictable access.

Will a third-party AI app work indefinitely?

Unlikely if it sells “lifetime” access; API usage has ongoing costs, so many such apps degrade or shut down.

What happens if a third-party app stops working?

You lose access and your one-time payment; refunds and continuity are rarely guaranteed.

Can third-party apps steal my data?

Potentially. Many have vague privacy policies, unclear storage practices, or monetize user data.

Do third-party GPT-4o apps have limits?

Most do—daily caps, throttling, reduced features, or older models to control costs.

How much does OpenAI charge for ChatGPT-4o?

There’s a free tier with limits, and ChatGPT Plus is typically $20/month for enhanced features and priority access.

Can I use GPT-4o without OpenAI’s official platform?

Yes, via trusted integrations using OpenAI’s API; beware apps that misrepresent model access or pricing.

Should I trust one-time payment AI services?

Be cautious. Sustainable AI access implies ongoing costs—“lifetime GPT-4o” is a red flag.

Conclusion – The Smarter Choice: Use OpenAI Directly

Instead of risking your money on an unreliable app, use OpenAI’s official ChatGPT platform. If you need more features, the Plus plan ($20/month) is a far better deal than gambling on a shady third-party app.

Final Thoughts

While some users fall for these “too-good-to-be-true” offers, informed users know that sustainable AI access isn’t free or permanent.

If you see an app offering “lifetime GPT-4o access” for cheap, think twice—you’re likely paying for an inferior, limited, or short-lived experience.

🔹 The truth is clear: Third-party GPT-4o apps are a trap. They promise the impossible—lifetime AI access for a one-time fee—but in reality, they exploit OpenAI’s tech, mislead users, and may even compromise your data.

🔥 Warning! If an AI app offers ‘unlimited GPT-4o for a one-time fee,’ it’s a red flag. Protect your money, data, and experience—stick to OpenAI’s official platform.

💡 Don’t let AI scams win. Stay informed, trust official sources, and share this post to protect others from falling into the same trap.

What do you think? Have you encountered these misleading AI apps? Share your thoughts in the comments!

📚 Related Posts You May Be Interested In

- Ethics of AI in Surveillance and Privacy ⬈

- Cybersecurity in AI-Based Workflows ⬈

- ChatGPT vs Microsoft Copilot ⬈

- ChatGPT vs Bing AI ⬈

- The Rise of AI-Generated Content ⬈

This article is part of the AI Tools Comparison Series ⬈.

This article is also part of the Definitive Guide to Brilliant Emerging Technologies in the 21st Century ⬈.

For a broader overview of AI tools, explore ChatGPT vs. 11 Powerful AI Tools: Unlock Their Unique Features in 2024 ⬈.

Thanks for reading.

Resources – Be Careful with Third-Party GPT-4o Apps

1. OpenAI’s Official Blog & Documentation

🔗 OpenAI News ⬈

🔗 Overview – OpenAI API ⬈

- Details about GPT-4o, its features, pricing, and official access points.

- Clarifies how OpenAI licenses its models and what’s legit vs. misleading.

2. OpenAI API Pricing & Terms

🔗 ChatGPT Pricing – OpenAI ⬈

🔗 Terms of Use – OpenAI ⬈

- Explains official costs, proving that third-party “lifetime” access is suspicious.

- Highlights OpenAI’s restrictions and policies against misuse.

3. OpenAI’s Developer Forum & Community Discussions

🔗 OpenAI Developer Community ⬈

- Developers frequently discuss unauthorized resellers and scams.

4. Reddit Discussions (AI & Tech Scams)

🔗 Artificial Intelligence (AI) – Reddit ⬈

🔗 ChatGPT – Reddit ⬈

- Many real users report scam apps claiming to offer cheap GPT-4o access.

5. News Articles on AI Scams

🔗 Search: “AI chatbot scams 2025” on Google News

- Major tech sites like TechCrunch, Wired, and The Verge often report AI-related fraud.

6. Apple & Google App Store Policies

🔗 App Review Guidelines – Apple Developers ⬈

🔗 Developer Policy Center – Google Play ⬈

🔗 Google Play Policies and Guidelines – Transparency Center ⬈

- Both stores have policies against misleading AI apps, yet some still get through.

📌 Important note: I am neither an OpenAI reseller nor a representative, and I gain nothing from this analysis. This post is meant to raise awareness and protect others.

ℹ️ Note: Due to the ongoing development of applications and websites, the actual appearance of the websites shown may differ from the images displayed here.

The cover image was created using Leonardo AI ⬈.