Create Sora-Style AI Videos for Free on Android & iOS in 2025

This detailed comparison, “How to Create Sora-Style AI Videos,” is part of our AI Tools Comparison Series, which explores the best tools shaping the AI landscape.

🧠 Introduction – How to Create Sora-Style AI Videos

Written by SummitWiz & DigitalChronicle.info—Real tech, no hype.

AI video generation has taken a giant leap with OpenAI’s Sora, a next-gen model capable of producing cinematic videos from simple text prompts.

While OpenAI’s Sora isn’t directly available to the public, Microsoft has exclusive early access to it through its strategic partnership with OpenAI.

This lets Bing offer lightweight video generation before Google’s Veo or other competitors catch up, a move that is as much strategic as it is technical in the AI race.

Yes, you read that right: with a smartphone and a Microsoft account, you can generate short AI videos “powered by OpenAI’s Sora,” but only in a very restricted format.

This article clears up the misconceptions, compares it with other AI video tools, and shows you how to try it yourself.

🔍 What Is OpenAI’s Sora?

Sora is OpenAI’s most advanced text-to-video AI model. It can create high-resolution, realistic, and complex video clips (up to 60 seconds) from natural language descriptions.

The technology blends multimodal deep learning, diffusion models, and vast training datasets to simulate cinematic scenes with motion, depth, lighting, and camera effects.

Use cases include short films, ads, scene prototyping, and content creation at scale. However, as of now, full access is only available to select partners, researchers, and a few demo users.

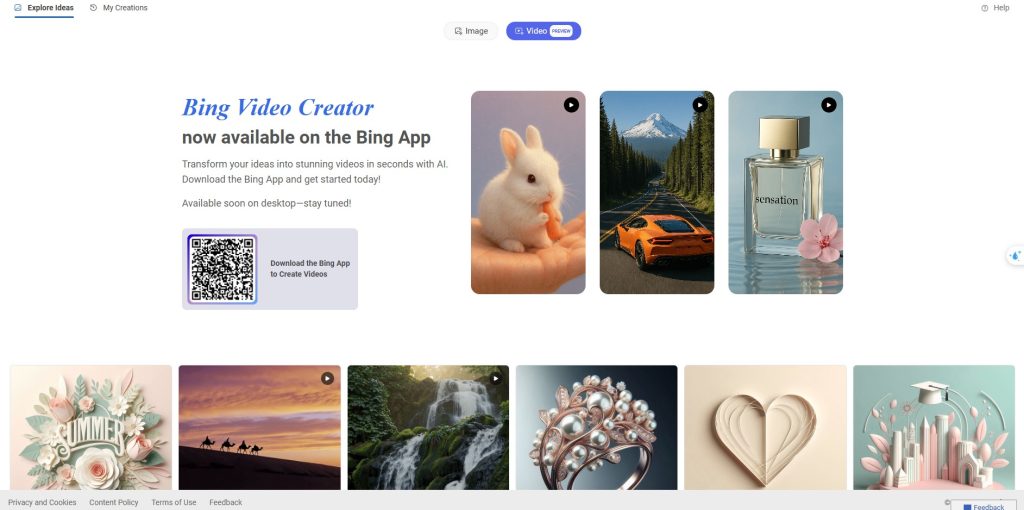

🧭 Microsoft’s Bing Video Creator: A Sora-Powered Lite Version

In partnership with OpenAI, Microsoft integrated a scaled-down version of Sora into the Bing mobile app.

This tool, called Bing Video Creator, allows you to generate 5-second AI videos on Android and iOS.

It uses Microsoft’s Copilot AI interface, where users enter a prompt, wait briefly, and receive a vertical (9:16) video clip.

This service is not the full Sora experience. Microsoft does not brand the app as “Sora” but explicitly states it is “powered by OpenAI’s Sora.”

💡 That distinction matters.

🔐 Important Limitations – Sora-Style AI Videos

- Video length: Fixed to 5 seconds.

- Orientation: Only vertical (9:16), ideal for Shorts, Reels, TikTok.

- Speed: The first 10 generations render in fast mode; after that, each fast generation costs Microsoft Rewards points, and without them, rendering falls back to a slower standard speed.

- Platform: Only available through the Bing app on iOS and Android.

- No audio or advanced controls: No sound, no extended prompts, and no control over cinematic pacing.

This is more of a public preview layer than a full-featured editing suite.

📊 Full Comparison: Sora vs Bing vs Runway vs Pika

| Feature | OpenAI Sora | Bing Video Creator | Runway ML Gen-2 | Pika Labs |
|---|---|---|---|---|
| Developer | OpenAI | Microsoft (OpenAI-powered) | Runway | Pika |
| Access | Private preview | Public (Bing app) | Free + paid plans | Free beta + paid tiers |
| Platform | Not public | Mobile (iOS & Android) | Web-based | Web & Discord |
| Prompt support | Complex, long prompts | Simple, short prompts | Mid-level | Basic, with modifiers |
| Duration | Up to 60 seconds | Fixed 5 seconds | Up to 16 seconds | Up to 10 seconds |
| Aspect ratio | Flexible (16:9, etc.) | Only 9:16 | 16:9, 9:16, square | Configurable |
| Audio | Planned | Not supported | Not supported | Limited beta support |
| Output quality | Cinematic | Moderate, mobile-optimized | High, creative | Stylized, improving |
| Watermark | No | No | Yes (free tier) | Yes (free tier) |
| Cost | Unavailable | Free, with Rewards for speed | Free (limited), Pro: $12+/mo | Free, Pro: $10–30/mo |

🌐 Clarifying Misunderstandings – Sora-Style AI Videos

Some recent tech headlines have given the impression that full access to OpenAI’s Sora is now available on mobile, which isn’t the case.

While Sora’s core capabilities are now integrated into Microsoft’s Bing app, the user experience remains simplified.

This article helps clarify the distinction between the public Bing Video Creator and the full OpenAI Sora model so that readers—whether tech enthusiasts or content creators—can better understand the tools available to them.

🧰 Use Cases – What Can You Create with Sora-Style AI Videos

Despite its limitations, Bing Video Creator has some promising applications:

- Creating AI-powered TikToks, Shorts, and Reels

- Visualizing blog or news content quickly

- Producing social media hooks and experiments

- Taking a first step into the world of AI-driven motion content

❓ 10 FAQs – Create Sora-Style AI Videos

What is Sora, and how is it used in Bing?

Sora is OpenAI’s advanced video model. Microsoft integrated it into the Bing app’s Video Creator feature for mobile users.

Do I need to pay to use Bing’s video generator?

No, it’s free. You only need a Microsoft account. After the first 10 fast generations, you can redeem Microsoft Rewards points for faster rendering; otherwise, videos render at standard speed.

How long are the generated videos?

Videos are currently limited to 5 seconds in vertical (9:16) format.

Can I use this on a desktop or only on mobile?

Currently (June 2025), it works only on the Bing mobile app (iOS and Android).

Is the video generator available worldwide?

Yes, though some countries may have limited support. An updated Bing app and Microsoft account are required.

What kind of prompts work best?

Use clear, descriptive language—e.g., “A robot dancing in a cyberpunk city at night.”

Can I download the generated videos?

Yes, the Bing app allows direct downloads without watermarks.

How is this different from OpenAI’s original Sora demo?

This is a simplified, mobile-friendly version with limited duration and lower resolution.

Does it support audio?

No, currently it generates video only—no audio or voice support.

Can I edit or extend the videos later?

Not inside Bing. You’ll need external tools (like CapCut or DaVinci Resolve) to edit or combine them.

🧾 Conclusion and Summary – How to Create Sora-Style AI Videos

The ability to generate Sora-style videos directly from a mobile app represents a significant step in democratizing AI video tools.

Creators can access OpenAI’s advanced video generation engine for free through Microsoft’s Bing app, without special hardware or subscriptions.

Although current output is limited to 5-second clips, this opens the door to rapid content ideation, short-form storytelling, and even mobile-based AI filmmaking.

This is a simple, powerful starting point for anyone curious about the future of video generation.

📚 Related Posts You May Be Interested In

👉 Curious about AI video tools? Check out Leonardo Integrates Veo 3: The AI Video Revolution Just Got Real ⬈.

👉 For a direct comparison, read Adobe Firefly vs Canva: Which Creative AI Tool Wins? ⬈.

👉 To understand broader AI workflows, explore VPNs in AI Workflows: Secure and Resilient Operations ⬈.

👉 This article is also part of the Definitive Guide to Brilliant Emerging Technologies in the 21st Century ⬈.

👉 For a broader overview of AI tools, explore ChatGPT vs. 11 Powerful AI Tools: Unlock Their Unique Features in 2024 ⬈.

Thanks for reading.

📚 Resources – Create Sora-Style AI Videos

- OpenAI – Sora Official Overview ⬈

The official page introducing OpenAI’s powerful Sora video generation model.

- Free AI Video Generator – Bing Video Creator ⬈

Bing Video Creator – now available on the Bing App!

- The Verge – Microsoft integrates Sora into Bing ⬈

A first look at Microsoft’s use of Sora technology inside its mobile app.

- You can now generate OpenAI Sora videos for free on iOS and Android – but only if you’re prepared to use Microsoft Bing ⬈

A practical walkthrough of Bing’s video feature, based on OpenAI’s Sora.

- Runway ML – Gen-2 Research and Demos ⬈

Explore Runway’s Gen-2 model for text-to-video generation.

- Leonardo AI – Creative Tools & Motion Features ⬈

Leonardo’s image and video generation platform supports Veo 3-like capabilities.

ℹ️ Note: Due to the ongoing development of applications and websites, the actual appearance of the websites shown may differ from the images displayed here.

The cover image was created using OpenAI ChatGPT.

The “Full Comparison” image was created using Leonardo AI ⬈.